In the past year, we have had a lot of feedback from the market about the use of Microsoft Defender as an alternative to ESET. According to Gartner, Microsoft Defender with ATP is now a product that deserves to be in the leader quadrant. Users who have Windows 10 machines now get Microsoft Defender for free with the operating system, and they have the option of upgrading to Microsoft Defender ATP by buying enterprise licenses (EL3 or EL5). We have noted that Microsoft has been aggressively pushing its EL licensing throughout the Microsoft channel.

Many years after entering the endpoint security market, has Microsoft Defender become such a good product? In our opinion, no. Although Defender's detection rate is comparable to ESET's, it raises remarkably more false alarms and noticeably slows down the machines that run it. SMBs that don't have malware analysts to investigate false alarms, and that don't keep up the pace of buying new computers every three years, should be aware that Microsoft Defender is an option they can ill afford.

To prove it, let's take a detailed look at the latest report [1] by AV-Comparatives, published on July 15, 2020. This first half-year report of the Business Main-Test Series of 2020 consisted of three tests: the Real-World Protection Test, the Malware Protection Test and the Performance Test.

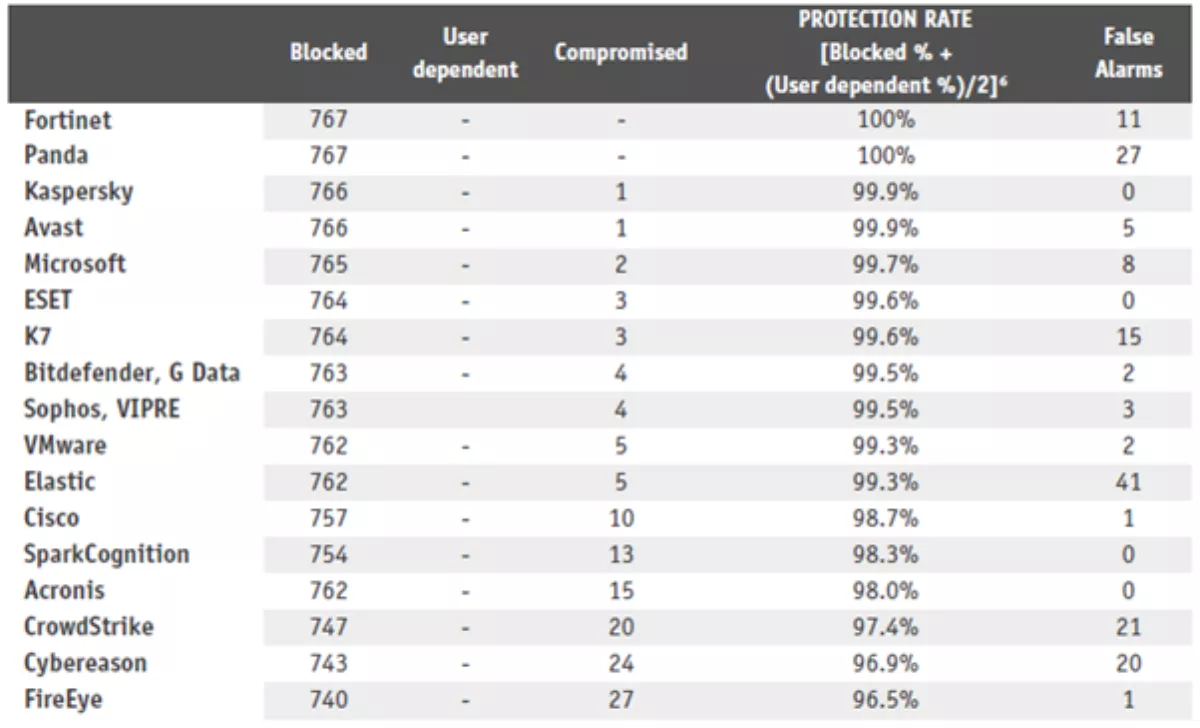

The AV-Comparatives Real-World Protection Test mimicked online malware attacks that a typical business user might encounter when surfing the Internet. The latest test comprised 767 test cases of drive-by exploits and URLs that pointed directly to malware executables. The number of missed samples ranged from zero (the two security solutions that detected all the cases both generated a high number of false alarms) to 27; the average number of misses was six. ESET Endpoint Protection Advanced Cloud with ESET Cloud Administrator detected all but three cases and generated no false alarms. The number of false alarms ranged between zero and 41.

The Malware Protection Test considered a scenario in which the malware pre-existed on the disk or entered the test system by some means other than directly from the Internet. In this test, ESET belonged to the group of four vendors with a 99.9% Malware Protection Rate – the second-best score in the test – and to the group of nine vendors with the best “Very low” False Positive Rate.

Microsoft Defender reached detection rates comparable to ESET's (just two misses in the Real-World Protection Test and 100% detection in the Malware Protection Test) – but at the cost of a much higher number of false alarms. Compared to ESET's zero, Microsoft had eight false alarms in the Real-World Protection Test, which put it in 13th position among the tested vendors. In the Malware Protection Test, Microsoft did not belong to the group of nine vendors, including ESET, with a “very low” (i.e., 0-5) number of false alarms. Microsoft Defender fell into the group of four solutions with 6-25 false alarms.

Tab. 1. Results of the Real-World Protection Test (March-June 2020) by AV-Comparatives

It's easy to create a security solution that excels in detection if false alarms are not considered a problem. Taken to the extreme, labelling every single sample as malicious would guarantee a 100% detection rate in any test. However, false alarms pose a huge problem, especially for SMBs that don't have the resources to investigate them.

Each false alarm may require an administrator to spend around 30 minutes investigating it. With eight false alarms, that adds up to around four productive hours wasted on investigations that lead IT administrators down a rathole.
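The arithmetic behind that estimate can be sketched in a few lines of Python. The 30-minute figure is this article's own rough estimate, not a measured value, and the function and variable names are purely illustrative.

```python
# Rough cost of investigating false alarms, using the article's estimate
# of about 30 minutes per alarm (an assumption, not a measured value).
MINUTES_PER_FALSE_ALARM = 30


def investigation_hours(false_alarms: int,
                        minutes_each: int = MINUTES_PER_FALSE_ALARM) -> float:
    """Return the administrator hours spent investigating false alarms."""
    return false_alarms * minutes_each / 60


# False alarms in the Real-World Protection Test, as quoted above.
real_world_false_alarms = {"ESET": 0, "Microsoft": 8}

for vendor, alarms in real_world_false_alarms.items():
    hours = investigation_hours(alarms)
    print(f"{vendor}: {alarms} false alarms, about {hours:.1f} hours")
```

Changing `minutes_each` shows how sensitive the total is to the per-alarm estimate; at 45 minutes per alarm, the same eight alarms would cost six hours.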

Besides detection rates and false alarms, the impact of security solutions on the performance of the machines they run on is also important for SMBs. We must consider how much of each machine's processing power is taken up by the endpoint security software. The Impact Score matters because New Zealanders, in general, try to extend the life of each machine as long as possible; in many instances machines are kept longer than the global industry standard of three years.

An excellent Impact Score improves the user experience and reduces the need for expensive hardware replacements. Slower machines also mean lower worker productivity.

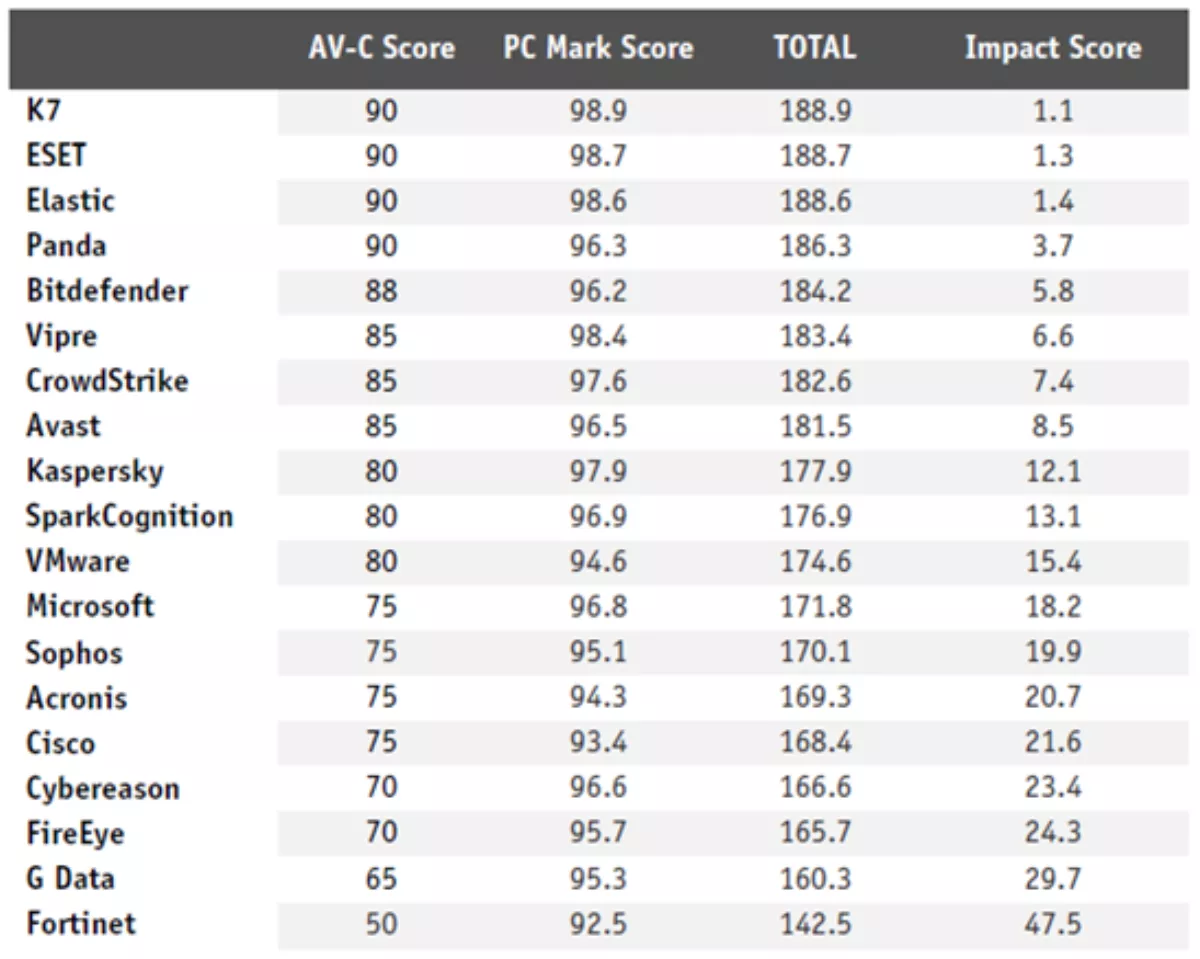

In their most recent Business Test, AV-Comparatives conducted two performance tests: the first was the recognized PC Mark benchmark, and the second was a proprietary test consisting of a set of common operations. The test machines had what AV-Comparatives called a “low-end machine configuration”: an Intel Core i3 CPU with 4GB of RAM.

In the PC Mark Test, the machine without any security software installed was assigned a baseline PC Mark Score of 100; the scores for machines with a tested security product installed ranged between 98.9 and 92.5. ESET Endpoint Security was the second most lightweight security solution, with a score of 98.7. (The winner in this category, K7, seriously failed in both False Positive tests, so ESET Endpoint Security may be considered the fastest among leading security solutions.)

Microsoft Defender's impact on performance was much heavier: with a score of 96.8, it ended up in eighth place.

In the proprietary Performance Test, the tester measured to what extent the security solutions slowed down a machine performing selected standardized operations: file copying; archiving and unarchiving; installing and uninstalling applications; launching applications; downloading files; and browsing websites. ESET, along with three other vendors, achieved the best score of 90. Microsoft, along with three other vendors, scored a meagre 75; only four security solutions slowed down the test machine more than Microsoft Defender.

Combined, the two results give ESET an Impact Score of 1.3, an excellent result confirming that ESET Endpoint Security has only a negligible impact on performance. Microsoft, on the other hand, again proved to be a resource hog – see the table below.

Tab. 2. Results of the Performance Test within the Business Security Test, H1 2020, by AV-Comparatives
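ESET's Impact Score of 1.3 is consistent with subtracting the two performance scores from the maximum attainable total of 190 (90 in the proprietary test plus the PC Mark baseline of 100). The sketch below assumes that formula, which is not spelled out in this article. The function name is illustrative, and the Microsoft figure it prints is derived from the scores quoted above under that assumption rather than copied from the report.

```python
# Assumed formula: Impact Score = 190 - (proprietary score + PC Mark score),
# where 190 = 90 (best proprietary-test score) + 100 (PC Mark baseline).
MAX_PROPRIETARY_SCORE = 90
PC_MARK_BASELINE = 100


def impact_score(proprietary_score: float, pc_mark_score: float) -> float:
    """Lower is better; 0 would mean no measurable slowdown."""
    best_total = MAX_PROPRIETARY_SCORE + PC_MARK_BASELINE
    return round(best_total - (proprietary_score + pc_mark_score), 1)


# Scores as quoted in this article for the H1 2020 Business Test.
scores = {"ESET": (90, 98.7), "Microsoft": (75, 96.8)}

for vendor, (proprietary, pc_mark) in scores.items():
    print(f"{vendor}: Impact Score {impact_score(proprietary, pc_mark)}")
```

Under this assumption, most of the gap between the two products comes from the proprietary test of common operations rather than from PC Mark.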

ESET's dominance over Microsoft in Performance Tests doesn't depend on whether the test machines are “low-end” or “high-end”. In their previous Business Test (August-November 2019), AV-Comparatives used test machines with an Intel Core i7-8550U CPU and 8GB of RAM. The results were similar, with ESET outperforming Microsoft in both Performance Tests.

In summary, although Microsoft Defender has gained market share and Microsoft has made some advancements in its offerings, it is not a silver bullet. There is often much more to consider when choosing an endpoint solution. Besides performance, other factors, such as local support from highly trained and certified personnel and the service levels required to keep businesses secure, are also crucial in ensuring customers are happy in the long run. These have been the key parts of ESET's and Chillisoft's proposition in the New Zealand market for 21 years.