Researchers have switched on the world's fastest AI supercomputer

Researchers have switched on the world's fastest AI supercomputer, delivering nearly four exaFLOPS of AI performance for more than 7,000 researchers.

Perlmutter, officially dedicated today at the National Energy Research Scientific Computing Center (NERSC), is a supercomputer that will help piece together a 3D map of the universe, probe subatomic interactions for green energy sources, and much more.

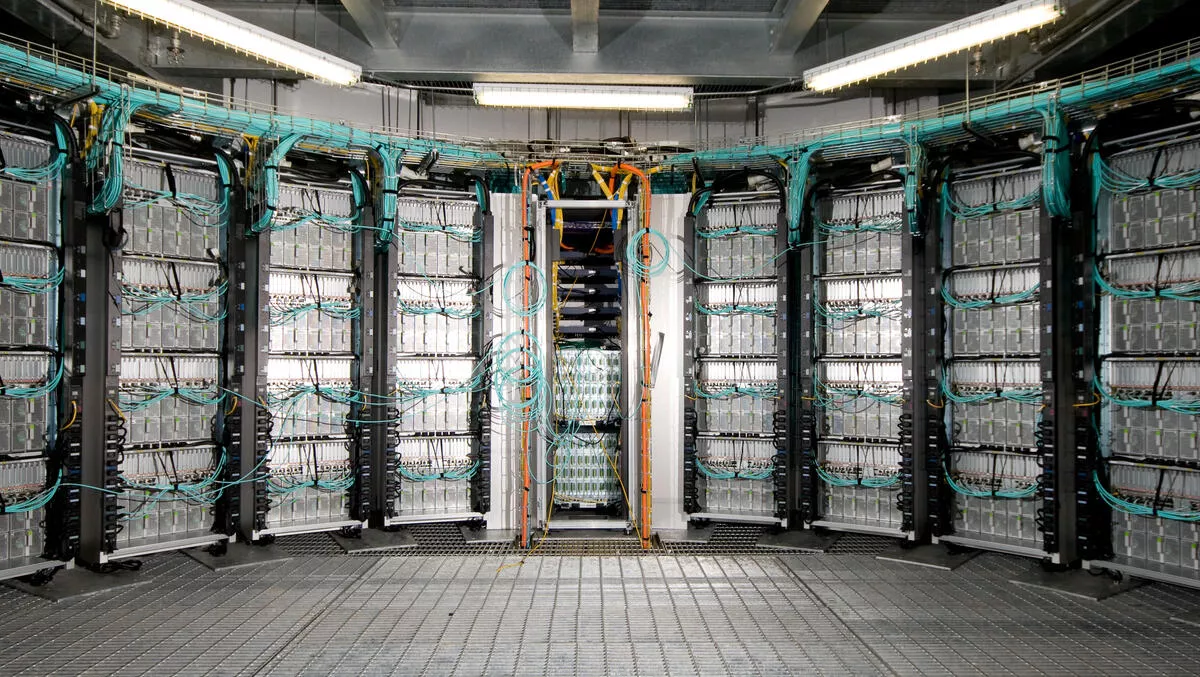

The supercomputer is made up of 6,159 NVIDIA A100 Tensor Core GPUs, which makes it the largest A100-powered system in the world. Over two dozen applications are getting ready to be among the first to use the system based at Lawrence Berkeley National Lab.
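For a rough sense of how a figure of "nearly four exaFLOPS" can arise from 6,159 GPUs, the sketch below multiplies the GPU count by an assumed per-GPU peak. The 624 teraFLOPS value is NVIDIA's published peak FP16 Tensor Core throughput for the A100 with structured sparsity. It does not appear in this article, and treating it as the basis of the headline number is an assumption.

```python
# Back-of-envelope estimate of aggregate peak AI throughput.

GPU_COUNT = 6_159  # number of A100 GPUs, as stated in the article

# Assumption (not from the article): peak FP16 Tensor Core throughput
# per A100 with structured sparsity, in teraFLOPS.
A100_PEAK_FP16_SPARSE_TFLOPS = 624


def peak_ai_exaflops(gpu_count: int, tflops_per_gpu: float) -> float:
    """Return aggregate peak throughput in exaFLOPS.

    1 exaFLOPS = 1,000,000 teraFLOPS.
    """
    return gpu_count * tflops_per_gpu / 1_000_000


if __name__ == "__main__":
    total = peak_ai_exaflops(GPU_COUNT, A100_PEAK_FP16_SPARSE_TFLOPS)
    print(f"{total:.2f} exaFLOPS")  # prints "3.84 exaFLOPS"
```

This is a theoretical peak for low-precision AI workloads, not a measured benchmark result.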

In one project, the supercomputer will help assemble the largest 3D map of the visible universe to date. It will process data from the Dark Energy Spectroscopic Instrument (DESI), a sort of cosmic camera that can capture as many as 5,000 galaxies in a single exposure.

DESI's map aims to shed light on dark energy, the little-known physics behind the accelerating expansion of the universe. Dark energy was largely discovered through the 2011 Nobel Prize-winning work of Saul Perlmutter, an active astrophysicist at Berkeley Lab who will help dedicate the new supercomputer named for him.

"I'm really happy with the 20x speed-ups we've gained on GPUs in our preparatory work," says NERSC data architect Rollin Thomas, who is helping to set up the project and works with Saul Perlmutter.

Another use for the supercomputer will be work in materials science, aiming to discover atomic interactions that could lead to better batteries and biofuels.

Traditional supercomputers can barely handle the maths required to generate simulations of a few atoms over a few nanoseconds with programs such as Quantum ESPRESSO. But by combining these highly accurate simulations with machine learning, scientists can study more atoms over longer stretches of time.

"In the past, it was impossible to do fully atomistic simulations of big systems like battery interfaces, but now scientists plan to use Perlmutter to do just that," says NERSC applications performance specialist Brandon Cook.

"That's where Tensor Cores in the A100 play a unique role. They accelerate both the double-precision floating-point maths for simulations and the mixed-precision calculations required for deep learning."
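To illustrate what "mixed-precision" means in practice, here is a minimal sketch in plain Python. It is not how Tensor Cores are implemented: the helper names are hypothetical, half precision is emulated with the standard `struct` module, and Python's 64-bit float stands in for the wider accumulator (GPU hardware typically accumulates in 32-bit). It only shows why keeping low-precision inputs but a wider running sum matters.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dot_fp16_accumulate(a, b):
    """Dot product where inputs, products and the running sum are all FP16."""
    total = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        total = to_fp16(total + product)  # sum is rounded to FP16 each step
    return total


def dot_mixed(a, b):
    """Dot product with FP16 inputs but a wider (here FP64) running sum."""
    total = 0.0
    for x, y in zip(a, b):
        total += to_fp16(x) * to_fp16(y)  # no rounding of the accumulator
    return total


if __name__ == "__main__":
    ones = [1.0] * 4096
    # FP16 cannot represent 2049, so the all-FP16 sum stalls at 2048.
    print(dot_fp16_accumulate(ones, ones))  # prints 2048.0
    print(dot_mixed(ones, ones))            # prints 4096.0
```

The all-FP16 sum stops growing once it reaches 2048, because the gap between adjacent half-precision values there is 2 and adding 1 rounds back down. The wider accumulator returns the exact answer.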

Software is an important component of Perlmutter. Researchers at NERSC say the NVIDIA HPC SDK the system uses supports OpenMP and other popular programming models.

They say RAPIDS, open-source code for data science on GPUs, will speed the work of NERSC's growing team of Python programmers. In a project that analysed all the network traffic on NERSC's Cori supercomputer, RAPIDS was nearly 600x faster than prior efforts on CPUs.

"That convinced us RAPIDS will play a major part in accelerating scientific discovery through data," says Thomas.

At the virtual launch event, NVIDIA CEO Jensen Huang congratulated the Berkeley Lab crew on its plans to advance science with the supercomputer.

"Perlmutter's ability to fuse AI and high-performance computing will lead to breakthroughs in a broad range of fields from materials science and quantum physics to climate projections, biological research and more," he says.

AI for science is a growth area at the U.S. Department of Energy, where proofs of concept are moving into production use cases in areas like particle physics, materials science, and bioenergy.

"People are exploring larger and larger neural-network models and there's a demand for access to more powerful resources," says NERSC acting lead for data and analytics Wahid Bhimji.

"Perlmutter with its A100 GPUs, all-flash file system and streaming data capabilities is well-timed to meet this need for AI."