Why NVMe is headed for the mainstream this year

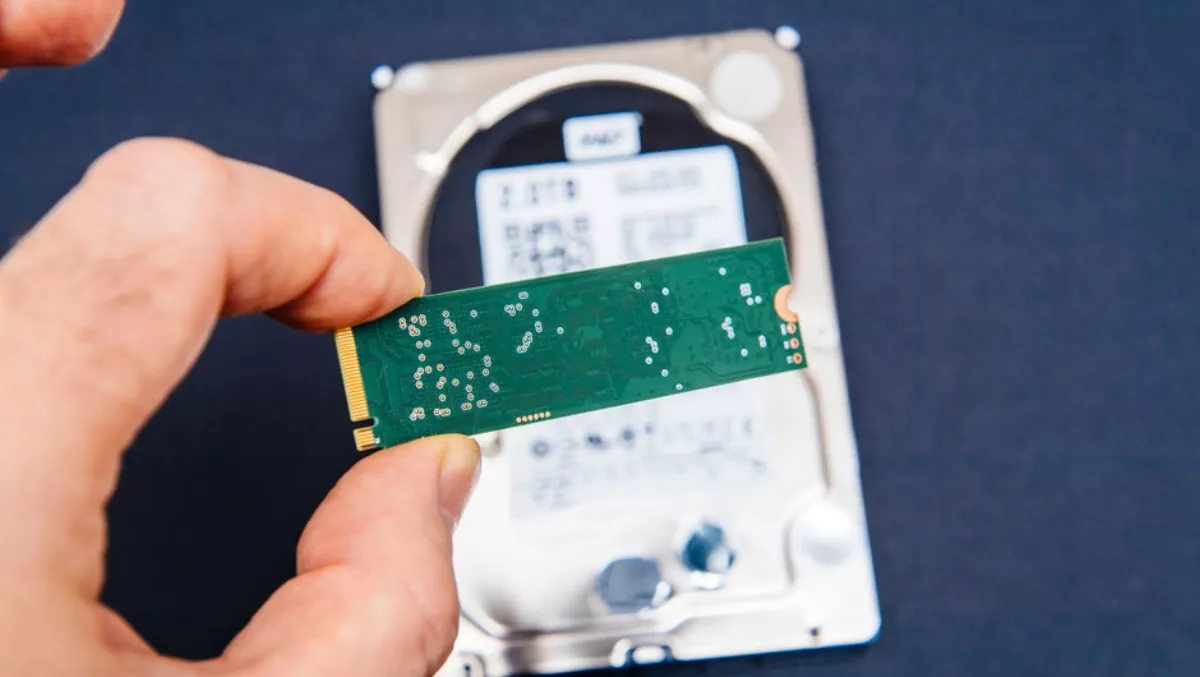

Five years ago, Non-Volatile Memory Express (NVMe) was an interesting but ultimately niche storage platform. Over time, its ability to replace the serial-attached SCSI (SAS) bottleneck with thousands of queues saw it become widely adopted in smartphones and laptops — small devices which need to efficiently access their storage.

All-flash storage architectures are fundamentally limited by SAS-based serial connectivity, as no matter how many CPU cores are used, or how dense the flash, all data must move serially.

With thousands of queues, NVMe enables compute and storage to engage in massively parallel communication, so everything moves faster.

What is NVMe?

NVMe is a protocol that accelerates the way CPUs and solid-state drives (SSDs) communicate. It replaces the existing SCSI protocol, which has been around for more than three decades. SCSI places each message in a single queue, which is essentially a line of commands that the device will execute when it gets around to them.

Regardless of how advanced or expensive the network using the SCSI protocol is, every command is actioned one at a time. The new NVMe world replaces this inefficient bottleneck within the backend of all-flash arrays with massive parallelism: up to about 64,000 queues and lockless connections that can provide each CPU core with dedicated queue access to each SSD.
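To make the queueing difference concrete, here is a minimal Python sketch of a toy model. It assumes every queue completes exactly one command per time step, which real devices do not do, and the function name `drain_steps` is invented for illustration. It shows only how spreading the same workload over more queues shortens the time needed to drain it.

```python
from collections import deque


def drain_steps(num_commands: int, num_queues: int) -> int:
    """Count the time steps needed to drain all commands.

    Toy assumption: each queue completes one command per step,
    and all queues make progress in the same step (in parallel).
    """
    queues = [deque() for _ in range(num_queues)]

    # Distribute commands round-robin, as if each CPU core
    # submitted work to its own dedicated queue.
    for cmd in range(num_commands):
        queues[cmd % num_queues].append(cmd)

    steps = 0
    while any(queues):  # an empty deque is falsy
        for q in queues:
            if q:
                q.popleft()  # this queue completes one command
        steps += 1
    return steps


serial_steps = drain_steps(1024, 1)    # one queue, one command at a time
parallel_steps = drain_steps(1024, 8)  # eight queues working side by side
print(serial_steps, parallel_steps)
```

In this idealised model the eight-queue case finishes in one eighth of the steps. Real-world gains depend on the drive, the workload and the host, so treat the ratio as an illustration rather than a benchmark.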

While NVMe has worked wonders for personal devices, the next step involves bringing these advantages to systems that connect to storage over a network rather than PCI Express (PCIe).

There's an open, non-proprietary standard to solve this: NVMe over Fabrics (NVMe-oF), the extension of NVMe to Ethernet and Fibre Channel storage networks. NVMe-oF takes the lightweight NVMe command set and the efficient NVMe queueing model, and adds an abstract interface to allow the PCIe transport to be replaced with other transports that provide reliable data movement.
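The idea of keeping the command set and queueing model while swapping the transport can be sketched as a small interface. The Python below is purely illustrative: the class and function names (`Transport`, `PCIeTransport`, `FabricTransport`, `submit`) and the command fields are hypothetical and do not correspond to the NVMe-oF specification or to any real driver API.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    opcode: str  # illustrative label such as "read", not a real NVMe opcode
    lba: int     # starting logical block address
    blocks: int  # number of blocks to transfer


@dataclass(frozen=True)
class Completion:
    command: Command
    transport: str  # which transport carried the command
    ok: bool


class Transport(ABC):
    """Hypothetical abstract interface: anything that moves commands reliably."""

    name: str

    @abstractmethod
    def deliver(self, command: Command) -> Completion:
        ...


class PCIeTransport(Transport):
    name = "pcie"

    def deliver(self, command: Command) -> Completion:
        # A real implementation would write to a local submission queue.
        return Completion(command, self.name, ok=True)


class FabricTransport(Transport):
    def __init__(self, fabric: str) -> None:
        self.name = fabric  # e.g. "ethernet" or "fibre-channel"

    def deliver(self, command: Command) -> Completion:
        # A real implementation would send the command over the network.
        return Completion(command, self.name, ok=True)


def submit(transport: Transport, command: Command) -> Completion:
    """Host-side code stays the same whichever transport is plugged in."""
    return transport.deliver(command)


read = Command("read", lba=0, blocks=8)
for t in (PCIeTransport(), FabricTransport("fibre-channel")):
    print(submit(t, read).transport)
```

The point of the sketch is that `submit` never changes: the same command travels over a local bus or a storage network, which is the property the abstract interface is meant to provide.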

NVMe picking up speed in 2019

As we approach 2019, NVMe is now making a play for big enterprise. It can speed up everything in an organisation's network, including databases, virtualised and containerised environments, developer initiatives and web-scale applications.

In fact, NVMe's huge throughput advantage over SAS is going to be required to take advantage of tomorrow's advances in multi-core CPUs, super-dense SSDs, new memory technologies and high-speed interconnects. Think artificial intelligence, machine learning and automation.

Large, complex industries like banking and eCommerce are especially well placed to reap the rewards. It's no secret that more transactions per second mean more revenue per second, and NVMe enables an enterprise's entire system to work faster, directly increasing the bottom line.

NVMe-oF already provides consistently low latency. The final piece of the puzzle, and the one most likely to drive wide-scale adoption by large enterprises such as banks and airlines, will be end-to-end capability, delivered by adding NVMe-oF for front-end connectivity.

This will be particularly applicable to environments seeking better performance, even lower latency and less compute overhead. NVMe-oF makes all this possible. When technology can have a clear impact on revenue, it is hard to imagine that it won't become a popular choice.

Legacy will lose out

NVMe-oF means all storage can be accessed in microseconds, and for end users there's no difference between local storage and remote storage connected over a high-speed network. Any organisation that runs on a database stands to benefit, so it is only logical that the NVMe revolution will take another step up in 2019.

When it does, there's a chance that storage architectures that aren't prepared for NVMe — and there are many of them — will be left behind. Legacy arrays and flash retrofits, for instance, probably can't be upgraded to 100 per cent NVMe, and won't be able to take advantage of this powerful and efficient technology.